This is the first of a short series of posts highlighting the master's thesis by Florence Bürgy, Pratiques participatives et patrimoine numérisé : le cas des manuscrits. Réflexion en vue d’un projet de transcription collaborative en Ville de Genève.

Bürgy analyzed the crowdsourcing needs of several cultural heritage institutions in Geneva, Switzerland, interviewing experts, testing platforms, and defining recommendations for prospective projects. We'll be posting translated excerpts from her work that we think are of interest outside Geneva.

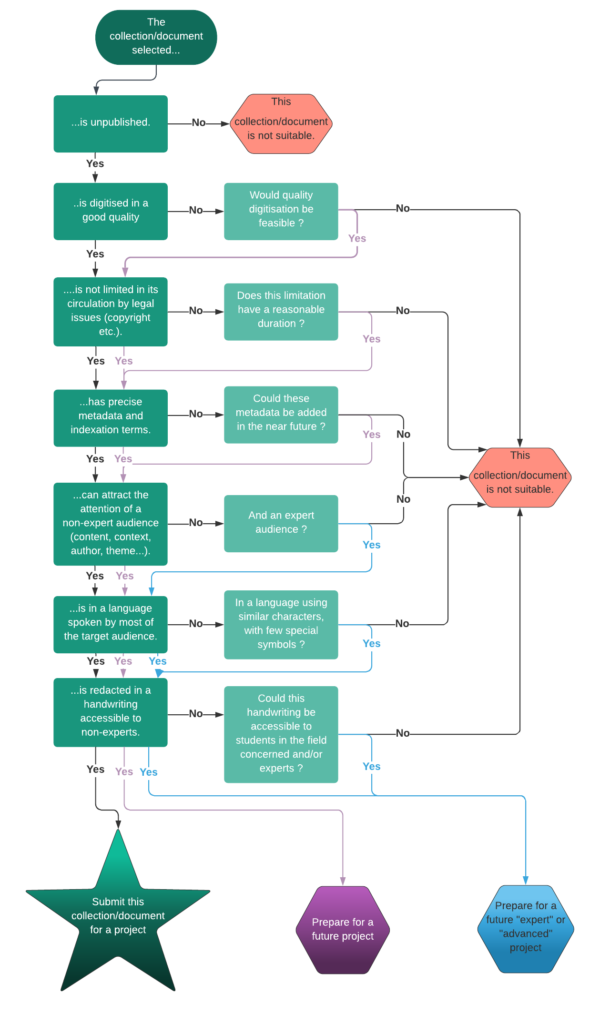

Bürgy's recommendation sections cover several points, but her decision-making guide for selecting material for crowdsourcing is especially useful:

The suggested documents are quite varied (satirical newspapers, travel narratives, seal collections, civil registers and cemetery records, correspondence, entomological display labels, etc.), which is a good omen for the diversity of future projects.

Interviews conducted with people who led crowdsourcing projects in Switzerland show that an initial project needs to “hit hard”, deal with current issues, and attract the attention of a wide variety of people. Documents will therefore need to be chosen with this in mind. Less “striking” projects can then be created, targeting more specific communities.

It remains important for each institution to be able to find their place with their own crowdsourcing projects, but also for institutions to collaborate, for example with projects that bring them together around one single theme.

Who chooses the documents remains a complex question. It goes without saying that the expertise of those responsible for collections will remain at the heart of each project, and the decision-making guide created for this purpose should make the selection easier. However, in a spirit of participation, it would be conceivable to present users with preselected holdings identified by the institutions and let them choose among these, or even to let them suggest holdings themselves.

As part of her research, Bürgy also conducted user tests on different existing crowdsourcing platforms to try to define desirable elements for projects.

The respondents tested Europeana, e-manuscripta, and the Library of Congress's By the People. The key points raised were:

- Have a clear front page, so that people know where to click

- Emphasize the visual, that which attracts the eye

- Make tutorials (video, ideally) and clear user guides available

- Make the transcription rules available at the click of a button

- Vary the types of documents, but present them in a logical order

- Make the progress of the transcription available for each document

- Clarify the review and validation system

- Add comments or specialist notes to the documents to place works in context

- Suggest links to external resources

- Link created data to existing databases, or even Wikipedia

- Try to work directly with an existing platform, rather than creating a completely new one

Translation credit: Adam Bishop