In a recent discussion on Bluesky, Ben and Scott Weingart (and others) had a conversation about the explosion of possibilities that might come from good, cheap AI transcription. Scott asks “how the bias of the spotlight of what’s searchable will reshape how people will engage with the past. That is, how will history be remembered and […]

Recent Posts from FromThePage

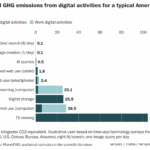

Environmental Impact of AI

At the Society of American Archivists conference last August, I joined a panel on artificial intelligence. The first question from the audience came quickly and, frankly, with a little heat: "How do you justify using energy-intensive tools like AI when the climate crisis is only getting worse?" That moment stuck with me. The archivists in […]

How Do You Know Whether AI Is "Good Enough"?

Yesterday we deployed two new features to help you evaluate results from Gemini 3 (and eventually other models) against human-transcribed or human-corrected text. First, we’ve developed a comparison screen that shows the differences between an AI-generated page transcription and human-created ground truth. Next, we calculate statistics, again comparing the AI draft against the human […]

Introducing Gemini 3.0 Support in FromThePage

When Ben sent me Mark Humphries’ report on testing a new, unreleased Gemini model, I got scared. And excited. Mark is a historian and digital humanist who’s gone deep on analyzing AI tools for textual transcription. He understands the dangers of “seductive plausibility” in LLM outputs. He knows what researchers and historians need from archival text. […]

Is That Transcription Really Human?

Last month, Denyse Allen asked this question on the Genealogy and AI Facebook group: If volunteers use AI to transcribe documents, is that OK? I have strong opinions, but I want to explain them. First off, the institutions running big crowdsourcing projects have staff who can automate sending all of their documents to AI engines for transcription. As a […]

AI and Crowdsourcing are Overturning Archival Workflows

March 2025

We were talking recently to Paige Roberts, the lone archivist at the Phillips Academy, and she said something interesting: "I just acquired a new collection. I'm kind of weird, I don't do processing, I just digitize it and throw it up on FromThePage, and boom, people transcribe it. If it's from 1790 you […]