I tend to call GPT-generated text “lardy” – too many words without a lot of meaning. GPT is in desperate need of a copy editor. For writing summarizations that might be used in a finding aid, this is NOT what you want. You want to capture the essence of a document or file in as concise a way as possible. Chain of Density Summarization, outlined in this paper, seems to be a way to make AI do this.

In this case, density is how full of meaning a paragraph is. Specifically, how many important details are captured in the summary. Adams, et al describe the approach as follows:

Specifically, GPT-4 generates an initial entity sparse summary before iteratively incorporating missing salient entities without increasing the length. Summaries generated by CoD are more abstractive, exhibit more fusion, and have less of a lead bias than GPT-4 summaries generated by a vanilla prompt.

The idea uses a fundamental strategy for working with a Large Language Model: iterating. In this case, you ask your LLM to summarize a document, then identify entities left out of of the summary, then re-summarize at the same length while integrating the missing entities. Repeat four times in all. At each iteration of the summary, there are fewer filler words and more specific entities mentioned.

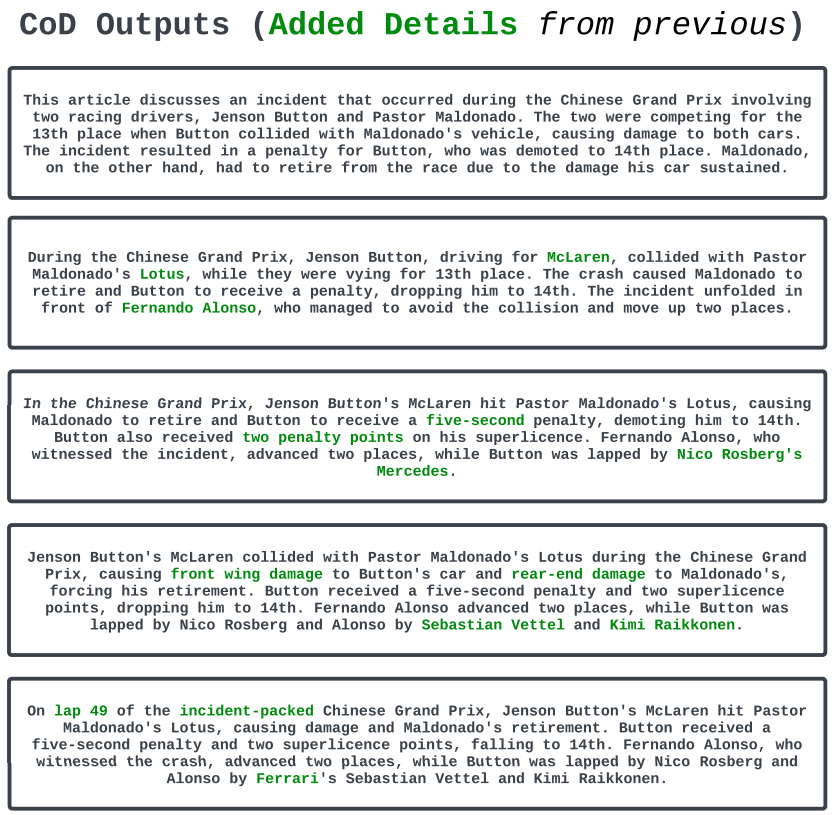

Here are the four increasingly dense summaries from the paper:

Notice how the first iteration contains only three entities, and by the fifth version we have sixteen, much more descriptive, entities.

Here’s the prompt:

Article: {Insert article}

You will generate increasingly concise entity-dense summaries of the above article. Repeat the following 2 steps 5 times.

Step 1: Identify 1-3 informative entities (delimited) from the article which are missing from the previously generated summary.

Step 2: Write a new denser summary of identical length which covers every entity and detail from the previous summary plus the missing entities.

A missing entity is:

- Relevant: to the main stories.

- Specific: descriptive yet concise (5 words or fewer).

- Novel: not in the previous summary.

- Faithful: present in the article.

- Anywhere: located in the article.

Guidelines:

- The first summary should be long (4-5 sentences, ~80 words), yet highly non-specific, containing little information beyond the entities marked as missing. Use overly verbose language and fillers (e.g., “this article discusses”) to reach ~80 words.

- Make every word count. Rewrite the previous summary to improve flow and make space for additional entities.

- Make space with fusion, compression, and removal of uninformative phrases like “the article discusses”.

- The summaries should become highly dense and concise, yet self-contained, e.g., easily understood without the article.

- Missing entities can appear anywhere in the new summary.

- Never drop entities from the previous summary. If space cannot be made, add fewer new entities.

Remember: Use the exact same number of words for each summary.

We’re experimenting with this approach, with the goal of implementing “AI summarization” of transcribed text in FromThePage. We’re hoping this could become an AI-assist to finding aid creation – but let me know what you think!

I first learned about it from this Youtube video (AI sources that are more meat than fluff are hard to find, so let’s ignore the sexualized thumbnail, shall we?). AllAboutAI is my favorite source of practical ChatGPT knowledge because Kris often provides references to the original research paper the technique was presented in.