Last month, John Dougan and I presented this talk at the Best Practices Exchange conference at the Tennessee State Library and Archives. This blog post contains our slides and talk notes, with John's presentation followed by mine.

[John Dougan] Over the last three decades the Missouri State Archives has maintained a robust distance volunteer indexing program that we now call our “eVolunteers”.

Our virtual indexers deserve a significant portion of the credit for the volume of records we have accessible on our website. We were using “citizen archivists” well before Archivist of the United States David Ferriero popularized the term.

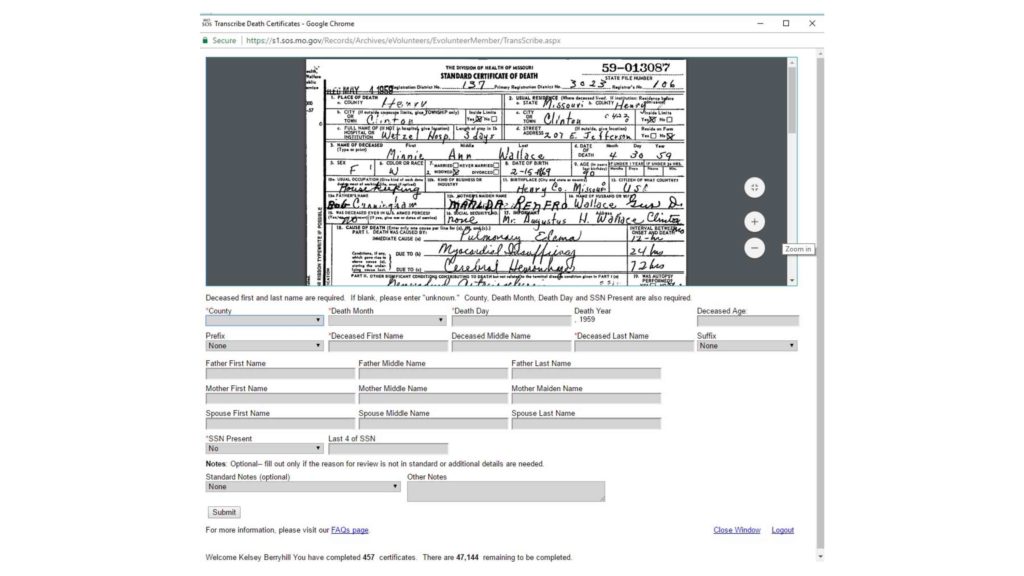

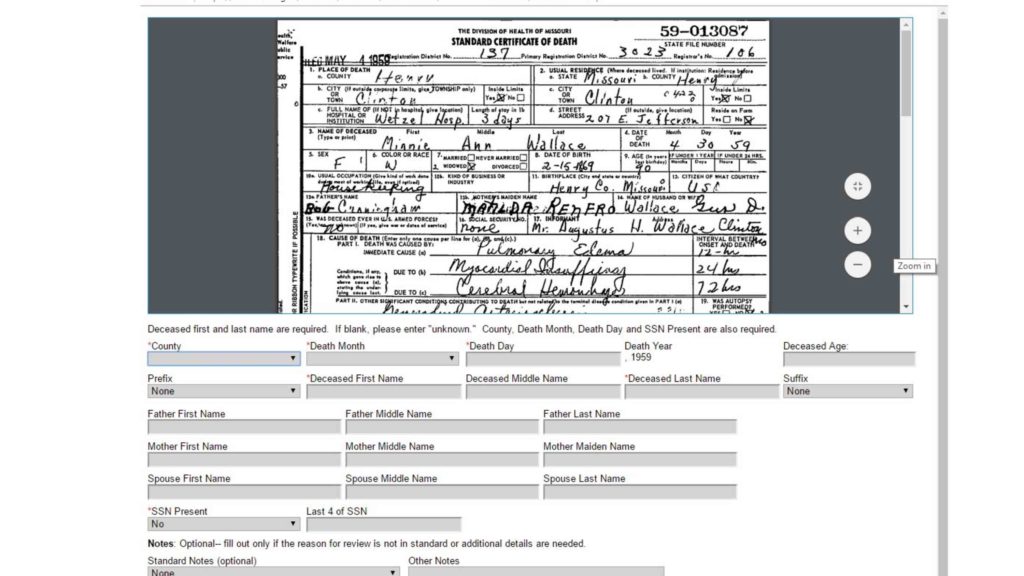

Initially our distance indexing was performed with mailed-out photocopies and Microsoft Excel templates. Today we still have a few volunteers who get images through our file share and enter them into spreadsheets, but most of our indexing is done online through 1) our in-house death certificate indexing platform, 2) projects with FamilySearch, and 3) the new field-based transcription options in the FromThePage app, which were developed in partnership with the Council of State Archivists (CoSA).

Looking back at many of our older databases, the biggest mistakes we made were from improper analysis of project goals.

We didn’t get what we expected out of the meat grinder. We thought we were creating a curated charcuterie board but we ended up with Vienna sausages—still edible, but definitely not what we wanted.

By 1) insufficiently analyzing the records, 2) improperly framing indexing inputs, and 3) ignoring obvious search expectations, we either a) added unnecessary burdens to indexing or b) left gaps in the information sought by researchers.

We were particularly late to the concept of attaching images of the primary sources to many of our databases. (Excuse—digital storage; multipage images and large volumes)

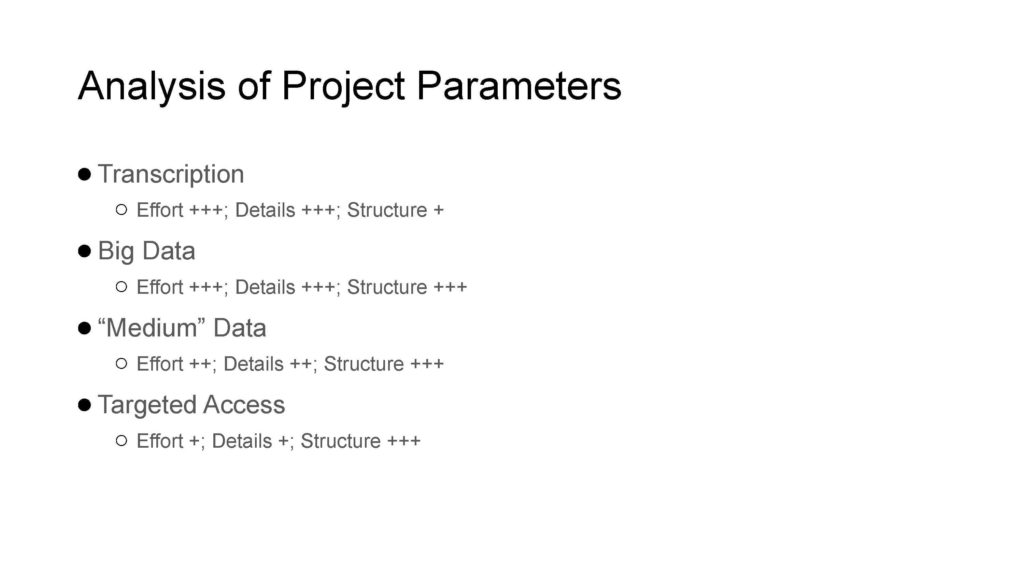

My analysis of approaches to indexing:

- Transcription
  - best for narrative content that requires keyword searching
  - requires considerable proofing
- Big Data
  - best for uniform scientific data
  - requires data normalization (a lot if the data is not standardized)
- “Medium” Data
  - best for tabular records with cross-sectional interests
  - great for indexing highly searchable data while skipping less searchable data
  - data normalization is still required, but with less data there is less effort needed
  - Judicial/Supreme Court: genealogists and historians; people and historical content
- Targeted Access: index only what you need to search and retrieve the image
  - fastest but often leaves a lot to be desired
  - best for a single niche audience (provides the best ROI for for-profit companies and archives)
  - best examples: all the awesome genealogy websites we use
  - but historical researchers have to browse through rolls and rolls of digitized microfilm images

Initially we indexed our death certificates from the printed indexes our state Vital Records Division had created prior to 1992.

Mistake #1: They didn’t retain their databases, so we had to re-index them. We also did not know their indexes had a 10% error rate, to which we added another 4-5% of errors with our single-pass indexing.

Mistake #2: Vital Records lost the printed index for one of the years, so we had to develop an online indexing application to use when that year’s death certificates were released. We continued to index the same limited fields (deceased names, death date, county, and the certificate number) so that the index could connect to the images.

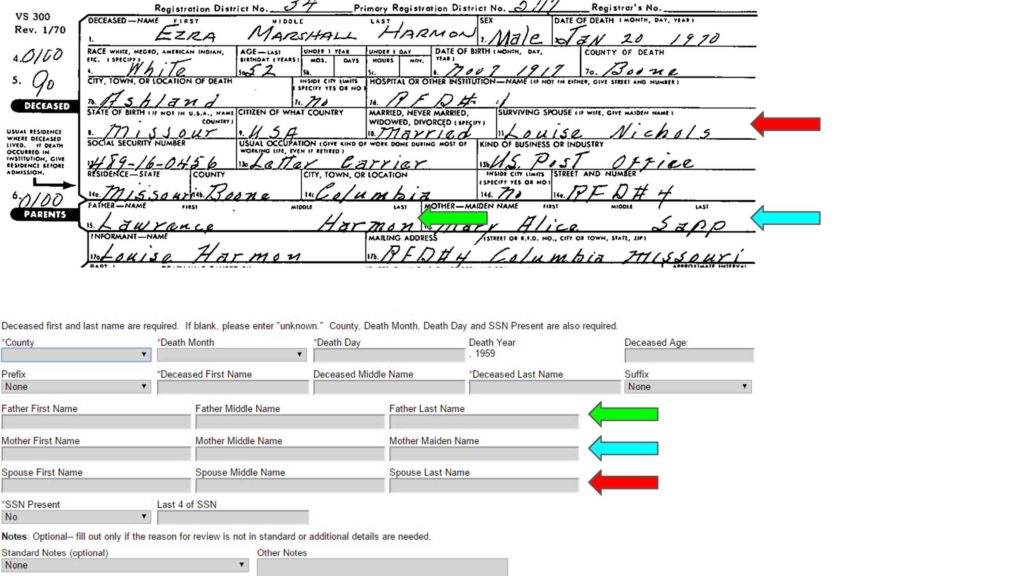

Mistake #3: As part of a threatened lawsuit we began indexing the last 4 digits of social security numbers, so we added age, parents’ names, and spouses’ names as well. This is the template above. We still sometimes wish we had also added race and gender and, if the handwriting was more readable and doctors could spell, the cause of death. But where do you draw the line?

What about cemetery, funeral home, residence, address…

All this is to say you need to make a methodical, conscientious decision about where you are going to draw the line. Under the mistake #3 model we indexed a year’s death certificates in two days; now we index the same 52,000-54,000 certificates in 4-6 weeks.

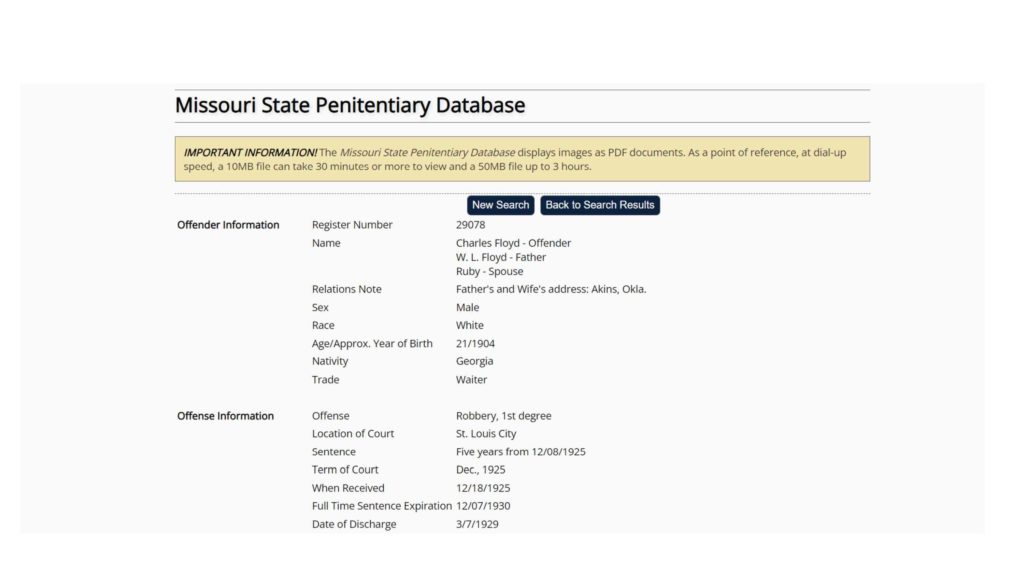

Lessons learned from one of our spreadsheet projects. Started in 1999 and still ongoing, the Missouri State Archives’ Missouri State Penitentiary transcription (now indexing) project has indexed 125 years’ worth of records in 24 years.

Our main takeaway on this project is: don’t index what you cannot search. A lot of effort went into transcribing everything on these records, but the online keyword search often either returns too many hits (a search for “horse” bundles horse tattoos with horse stealing) or returns no results, because some fields are not mapped to the search in an attempt to avoid more instances of this. A lot of work went in for little reward.

My Analysis only

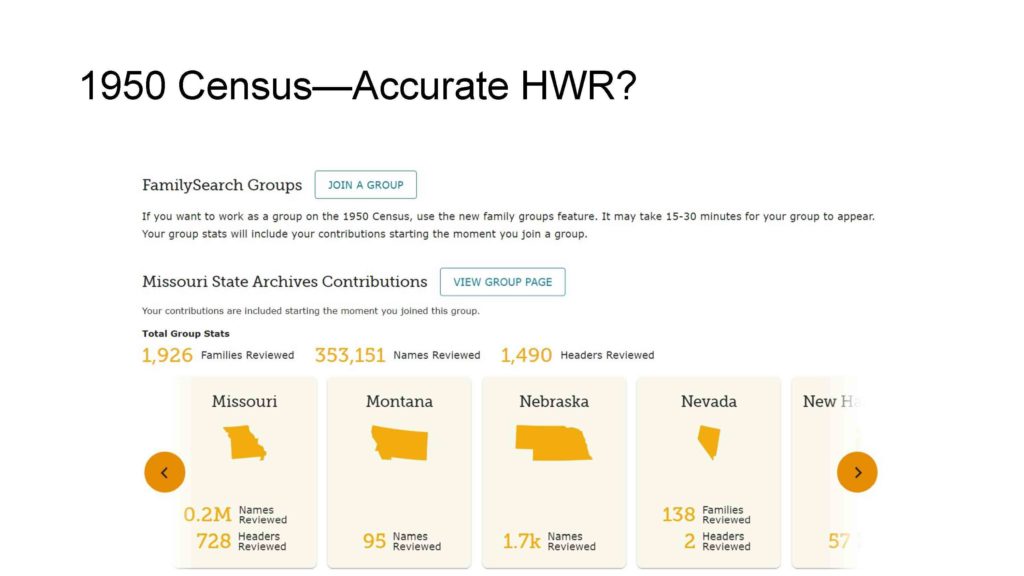

Our MSA eVolunteers helped verify the 1950 Census, but we were not involved directly in creating any of these projects.

The National Archives used Amazon Web Services to run “HWR” (handwriting recognition) on millions of pages from the 1950 Census so they were accessible on day one.

NARA’s platform also allows for corrections, which is great because the HWR was not great: okay to pretty bad. The browse tools allowed reasonable access.

Contrast that with Ancestry/FamilySearch: Ancestry’s proprietary HWR worked extremely well and was online for review by FamilySearch relatively quickly, but there were a few anomalies. The programmers missed programming known exceptions that were in the instructions, so the system tried to make names out of “no one home” and “vacant”.

FamilySearch and Ancestry also both allow corrections, and FamilySearch volunteers verified the data within months of the release.

Lessons hopefully learned:

- QC will always be required
- The outlook for HWR looks promising; in addition to Ancestry’s success:
  - FamilySearch: paragraph-style Spanish-language product
  - Adam Matthew Digital: SAS product
- And read the original directions and program in anticipated anomalies

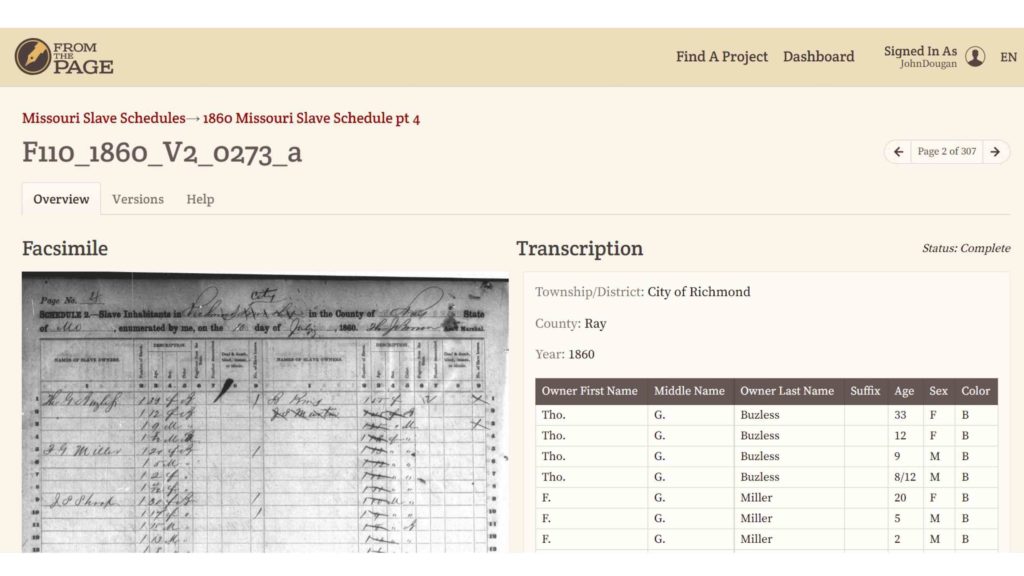

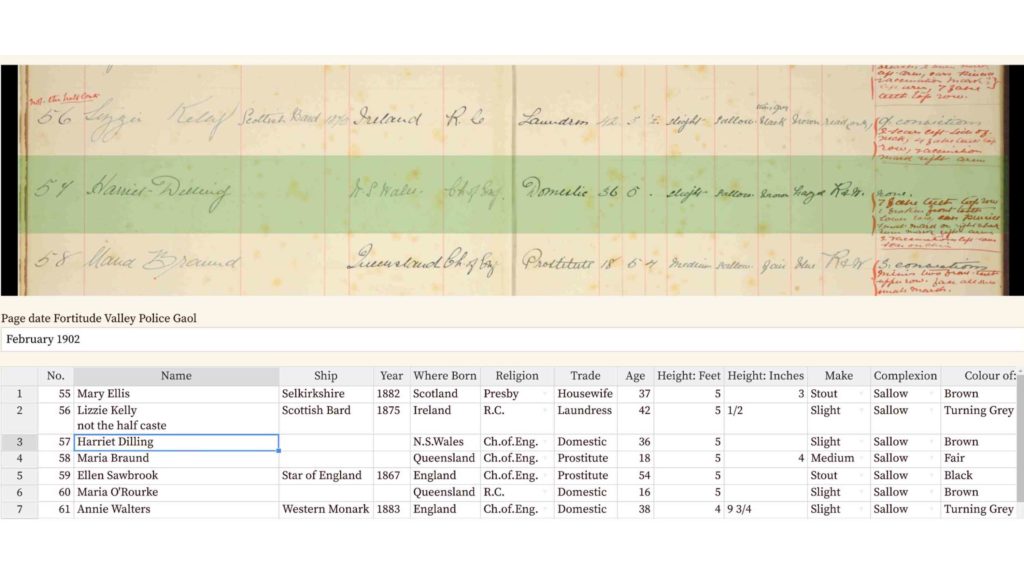

Example of a “Medium” to “Targeted” Indexing Project

MSA eVolunteers indexed the 1850 and 1860 Federal Slave Schedules for Missouri. The enslaved individuals were not enumerated by name unless they were over 100 years old, so we ended up indexing the year, county, township, owners’ names, age, sex, color and unique notes, such as whether they were blind, deaf, listed as insane, or had a separate employer.

We did not index the number of slave houses, which was a separate column for 1860 and would have been very interesting to know, nor did we index the fugitive column. Both were filled out inconsistently: sometimes with no information, sometimes with every individual marked, and sometimes with obviously incorrect information.

Staff members conducted line-by-line quality control on the geographic location information, names and notes, but only did project-wide data normalization on the age, sex and color fields.

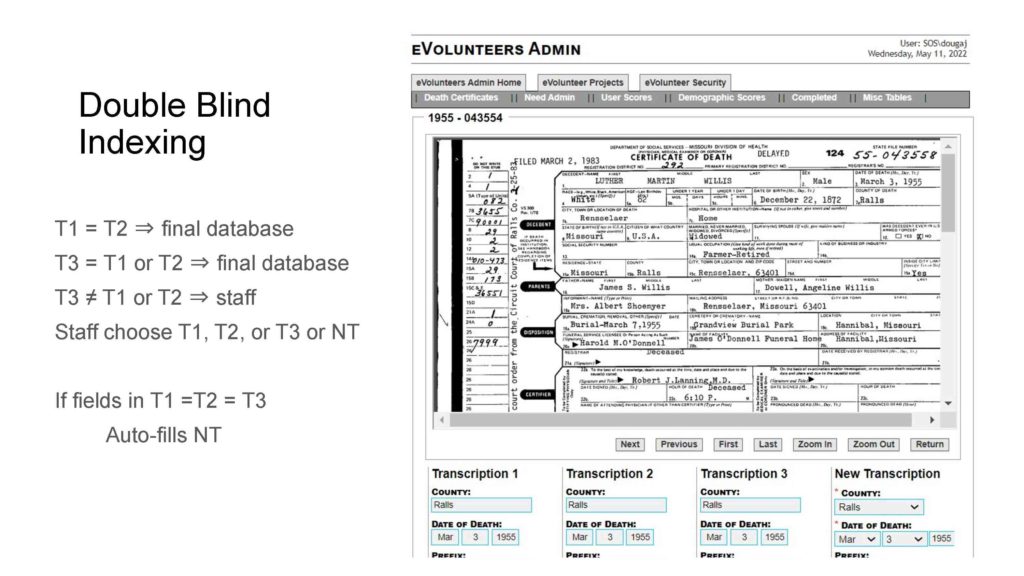

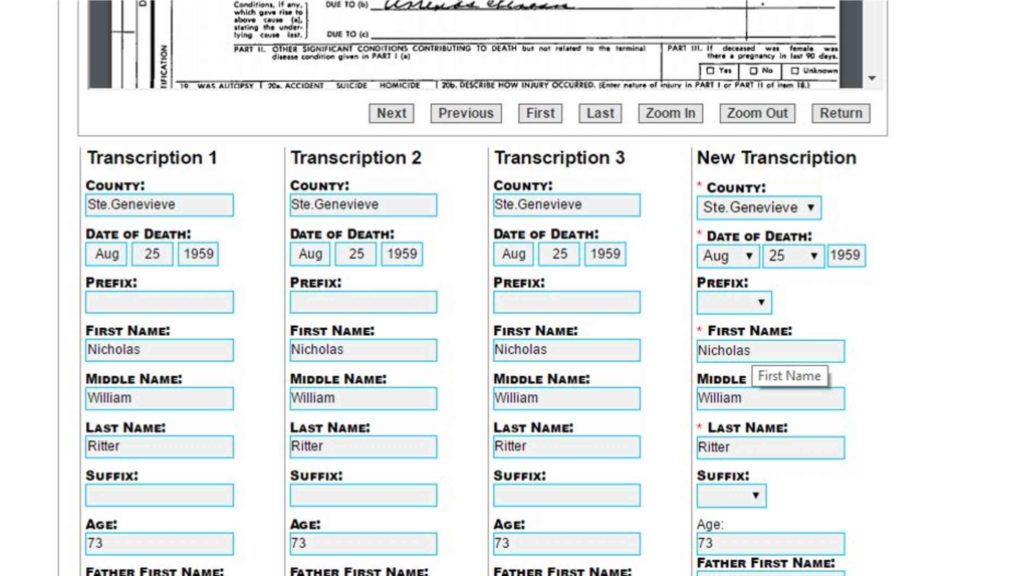

Double blind indexing produces the most accurate results.

- T1 = T2 ⇒ final database

- T3 = T1 or T2 ⇒ final database

- T3 ≠ T1 and T3 ≠ T2 ⇒ staff, who can choose T1, T2, or T3 or enter a New Transcript

New Transcript auto-fills fields where all 3 transcripts agree.
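The rules above can be sketched in a few lines of Python. This is only an illustration, not the eVolunteer implementation: the `resolve` function, the status strings, and the choice to compare whole records by exact equality are all assumptions made for the example.

```python
from typing import Optional

# A transcript maps field names to the values one volunteer keyed.
Transcript = dict[str, str]


def resolve(t1: Transcript, t2: Transcript, t3: Optional[Transcript] = None):
    """Apply the T1/T2/T3 rules and return (status, record)."""
    if t1 == t2:
        # T1 = T2: accept without further review.
        return "final", t1
    if t3 is None:
        # T1 and T2 disagree, so a third keying is needed.
        return "needs_third", None
    if t3 == t1 or t3 == t2:
        # T3 matches one of the first two: accept it.
        return "final", t3
    # No two transcripts agree: send to staff, prefilling a New Transcript
    # with only the fields on which all three transcripts agree.
    prefill = {
        field: value
        for field, value in t1.items()
        if t2.get(field) == value and t3.get(field) == value
    }
    return "staff_review", prefill
```

With three transcripts that disagree only on the first name, staff would see the last name already filled in and key just the disputed field.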

With IT: don’t ask “can you do X?”; ask “what is the best way?”

[Ben Brumfield] I'm Ben Brumfield, and I run the FromThePage crowdsourcing platform John mentioned. In this talk, however, I'd like to talk about the results of a research project I did during the early months of the pandemic. It was a strange time, and called for some strange work.

John has presented on the practices at the Missouri State Archives. Their eVolunteer transcription platform presents a web form to volunteers underneath a PDF of a death certificate, asking users to transcribe from the certificate into form fields.

Each certificate is transcribed by two different volunteers, and the results are compared with each other. If they match, the form is considered done. If not, the same certificate is presented to a third volunteer to be re-keyed, then--in case of no consensus--shown to a staff member.

Their platform provides an ideal data set for analyzing quality in crowdsourcing projects. But how should we analyze errors?

For that, I'd like to take you back to a much older institution.

The Library of Alexandria acquired copies of literary works brought into Egypt during the Hellenistic age. As a result, they held many different versions of the same poems, plays and histories.

Founded a hundred years later, the Library of Pergamon rivaled the Library of Alexandria, attempting to build a collection to match or surpass it. The two libraries competed to hire the best scholars, especially experts on the highest-prestige literary works, the Iliad and Odyssey.

Scholars at both institutions struggled to make sense of the variation they found in different copies of the Homeric epics. The Alexandrians, influenced by Platonism, sought to put together a version that matched their ideal of what Homer would have said.

By contrast, the Pergamese were Stoics, believing that mortal perfection was impossible. Rather than pursuing an ideal, they sought to identify mistakes in the copies they possessed, to try to figure out the most authentic version of the text. They reasoned from documentary evidence, rather than aesthetic ideals.

This was the beginning of textual criticism. Later scholars would use similar approaches on the Greek playwrights, the Hebrew Bible, the New Testament, classical Latin texts, and medieval vernacular literature.

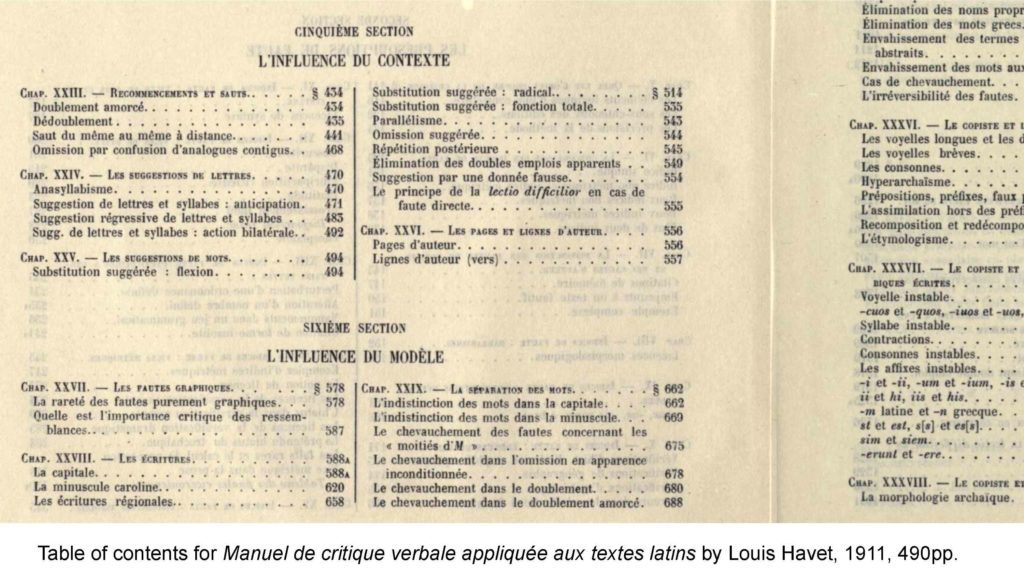

By the beginning of the 20th century, you had comprehensive guides to errors, like this 490-page book on scribal mistakes. You can see errors broken down to specific problems with Caroline minuscule script, or capital letters, for example.

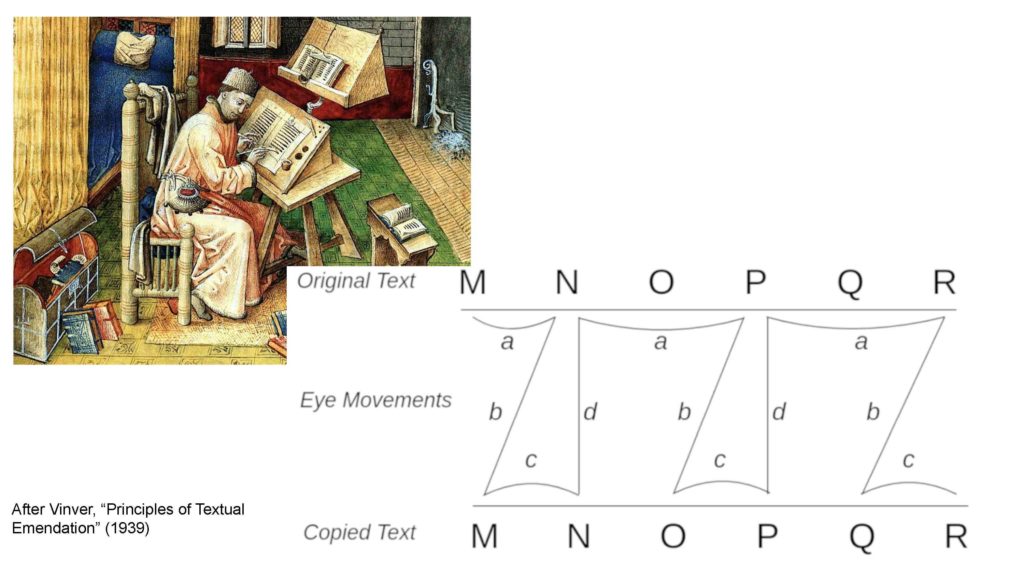

In the 1930s, Eugène Vinaver classified scribal errors based on where the scribe was looking when an error happened: did they mess up while reading from the exemplar, while writing onto their copy-text, or when their eye was switching between the two?

This seemed like the perfect framework for analyzing transcription errors in 21st century crowdsourcing projects.

John, Christina, and the folks at the Missouri State Archives were kind enough to provide me with the raw data of their recently-completed 1970 Death Certificate indexing project. I pulled out all of the transcriptions that had been classed as errors, and manually classified the first thousand, looking at the specific fields that were differently transcribed by each user, comparing them against the original death certificate.

Here’s what I found.

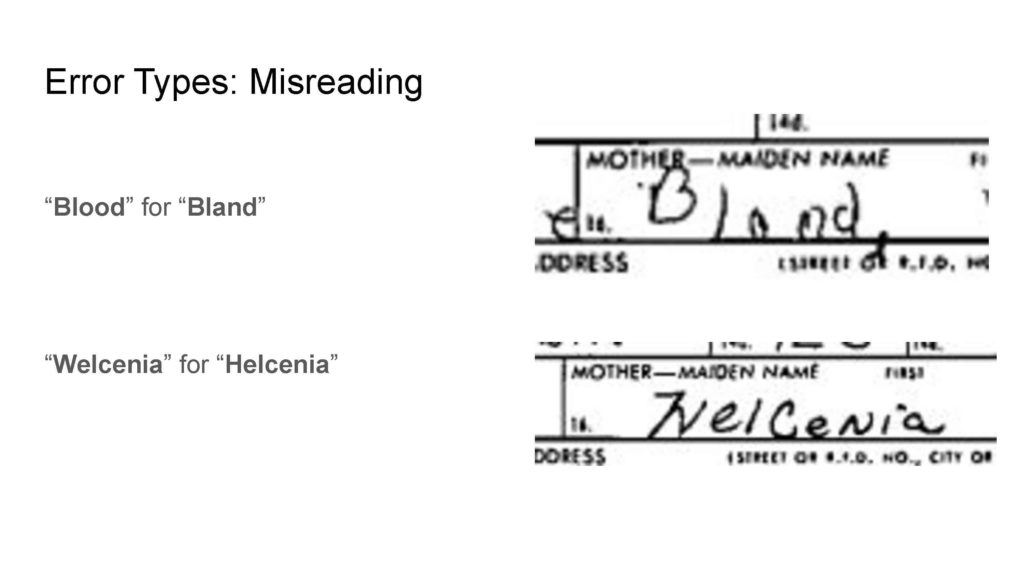

Misreading happens when the user is looking at the original certificate but can't quite make out what the letters say. Here a user typed "Blood" for "Bland", because the "a" and "n" are indistinct. Next we see the name Welcenia for Helcenia because of a strange capital-H.

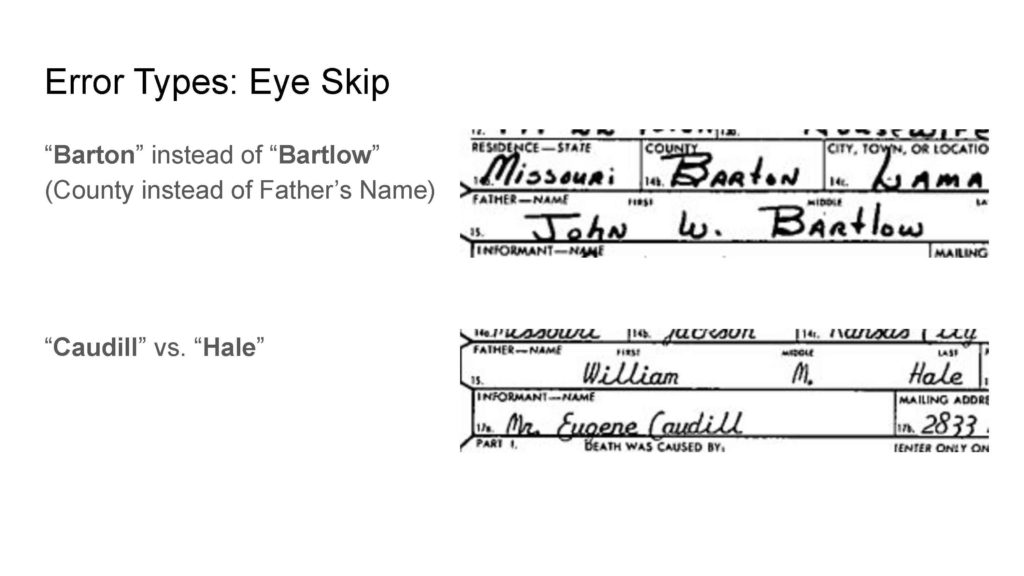

Eye skip happens when a user reads from the wrong field. This likely happens when they've looked at their keyboard or at the web form as they type the previous word, then look back to an incorrect place in the certificate. Here you see a Father's Name transcribed as "Barton" instead of "Bartlow", as the user read from the county name field. Next you see Father's Name transcribed as Caudill instead of Hale, reading the Informant surname instead of the Father's surname.

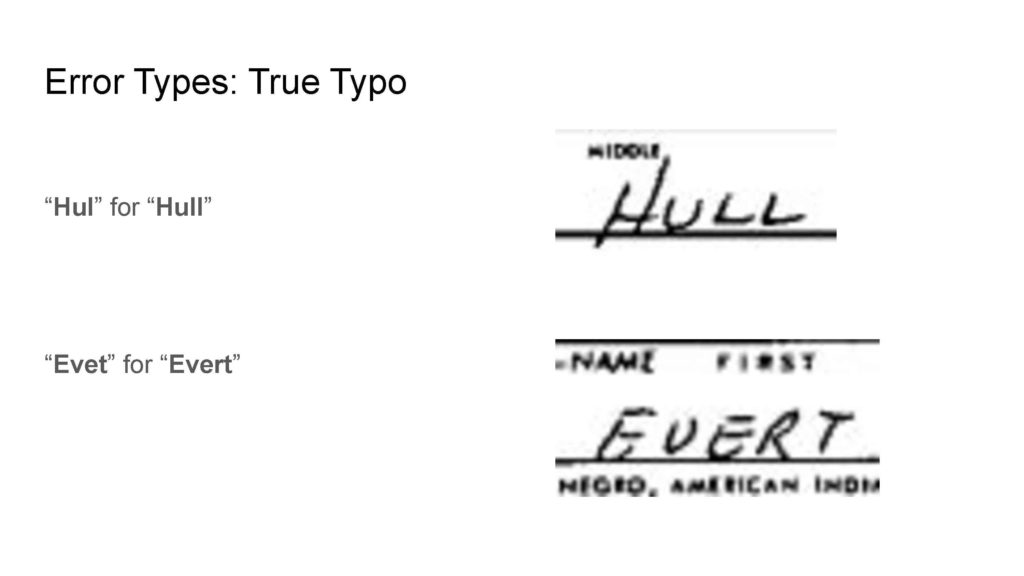

True typos are exactly that – an entirely mechanical error. In both of these examples, the transcriber has just left out a letter.

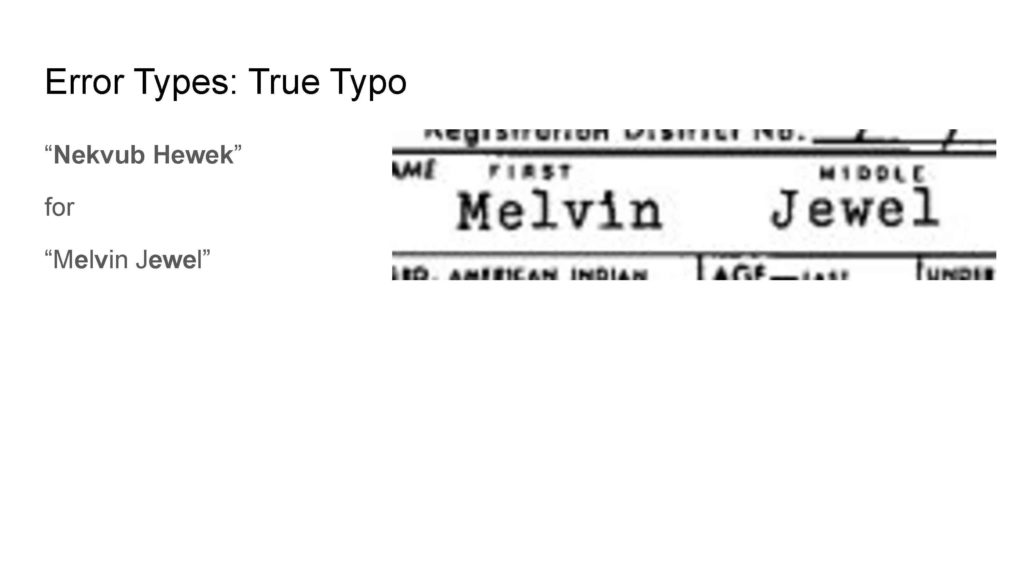

A more interesting example is Nekvub Hewek for Melvin Jewel. Can anybody tell me what happened here? [pause] That's right, the user's right hand has slipped over by one set of keys. (I don't think this is the kind of mistake a medieval scribe would be able to make.)
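To see the slip mechanically, here is a small Python sketch that maps each right-hand letter key on a US QWERTY keyboard to the key one position to its left. The `slip_right_hand_left` function and the table are illustrative assumptions; the direction of the slip is inferred from the example itself.

```python
# Right-hand letter keys on a US QWERTY layout, each mapped to the key
# immediately to its left. Left-hand keys are deliberately absent, so
# they pass through unchanged.
LEFT_NEIGHBOR = {
    "y": "t", "u": "y", "i": "u", "o": "i", "p": "o",
    "h": "g", "j": "h", "k": "j", "l": "k",
    "n": "b", "m": "n",
}


def slip_right_hand_left(text: str) -> str:
    """Simulate typing `text` with the right hand shifted one key left."""
    out = []
    for ch in text:
        shifted = LEFT_NEIGHBOR.get(ch.lower())
        if shifted is None:
            out.append(ch)  # left-hand key, space, or punctuation
        else:
            out.append(shifted.upper() if ch.isupper() else shifted)
    return "".join(out)


print(slip_right_hand_left("Melvin Jewel"))  # Nekvub Hewek
```

Inverting the table would let a reviewer test whether a nonsense value decodes to a plausible name.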

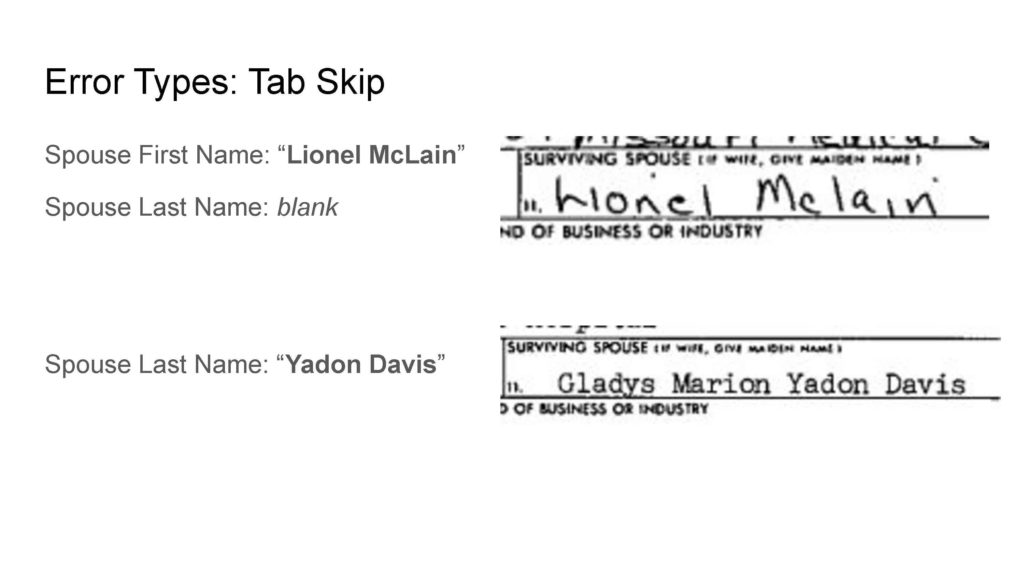

Tab Skips are another kind of mistake made by touch-typists who never review the form. In these cases, the user attempted to tab from the first name field to last name field, but missed the tab key, so both names went into the first field. I believe that the second example is of a premature tab, sticking middle and last names in the last name field.

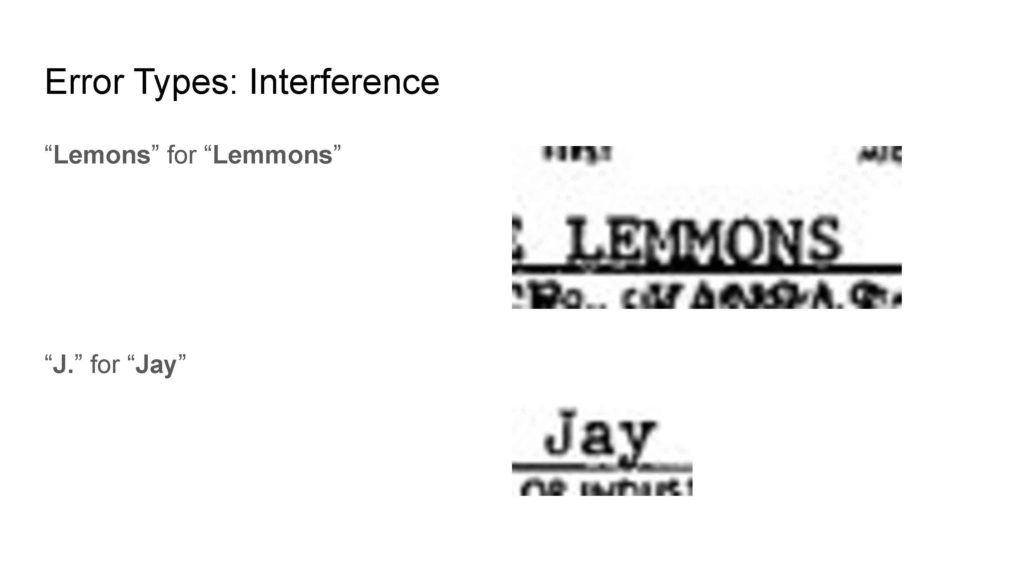

Interference is a kind of mental error, in which the transcriber's brain supplies a "better" version of the word they are transcribing, such as the fruit Lemon instead of the surname Lemmon, or the letter J for the name Jay.

In some cases interference is caused by external stimulus, when the transcriber accidentally types a word they hear in conversation or on TV, having nothing at all to do with the text! If you've ever tried to have a conversation while you write an email, you know what I mean.

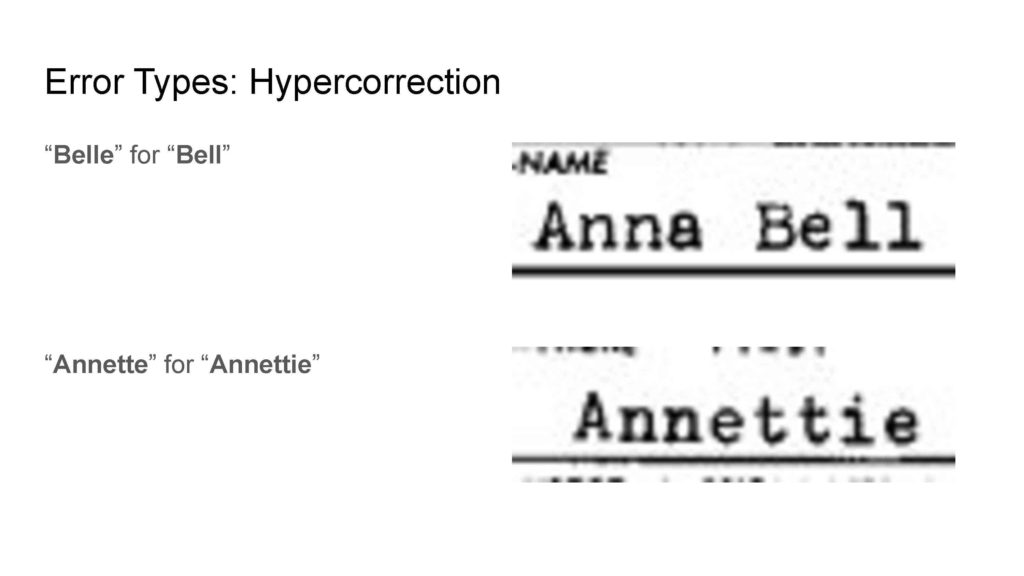

A particular kind of interference is hypercorrection -- when a user types what they think is the correct form instead of what's actually written. Here you see the feminine given name "Belle" replacing the family name "Bell" in this person's middle name. Below, "Annette" replaces the less-common "Annettie".

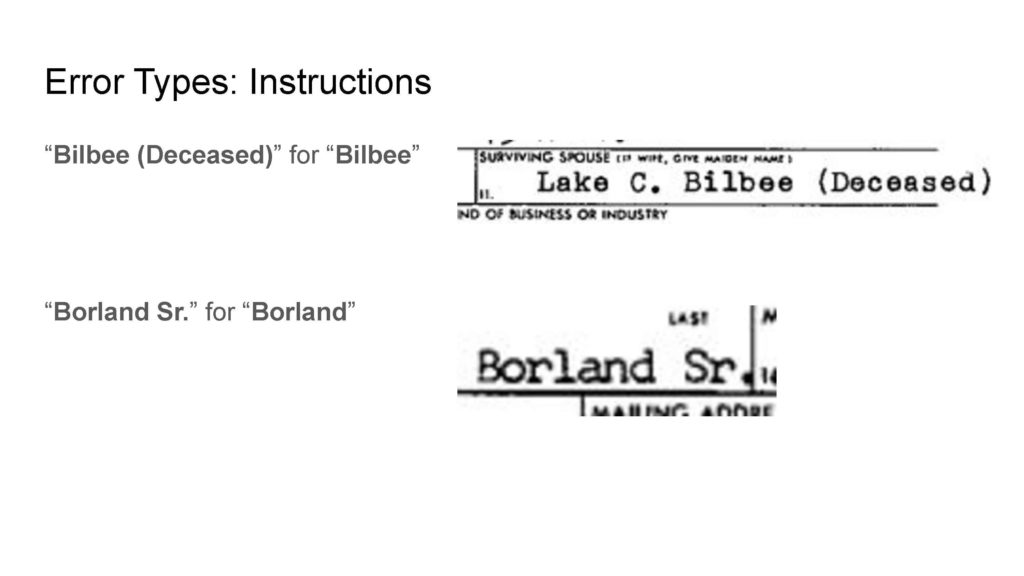

Instruction errors are simply the failure to follow the project instructions. The eVolunteer project asks that users not add clerical commentary like "Deceased" or name suffixes like "Sr.", but these users have done so anyway.

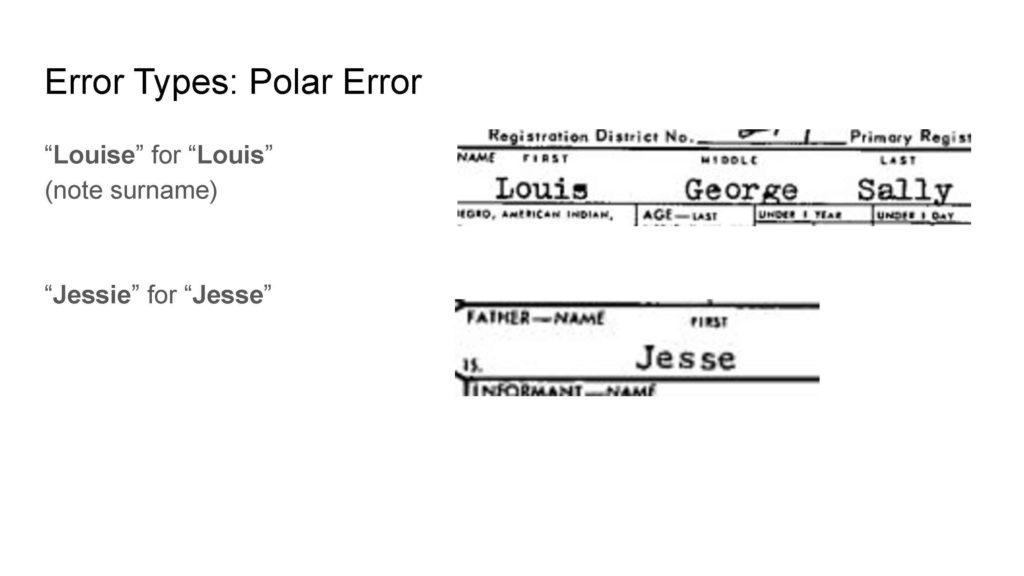

Polar errors happen when a word is replaced with its mental opposite, like "North" for "South" or "hot" for "cold". This happens a lot with gender, and we see it here with "Louise" replacing "Louis", or feminine "Jessie" replacing masculine "Jesse".

Finally, two error types were really unclassifiable: blank fields and 0-valued numeric fields just didn't give me enough information to speculate as to why these mistakes were made.

There were also a handful of errors in which I disagreed with the consensus, and decided that the "error" was actually correct.

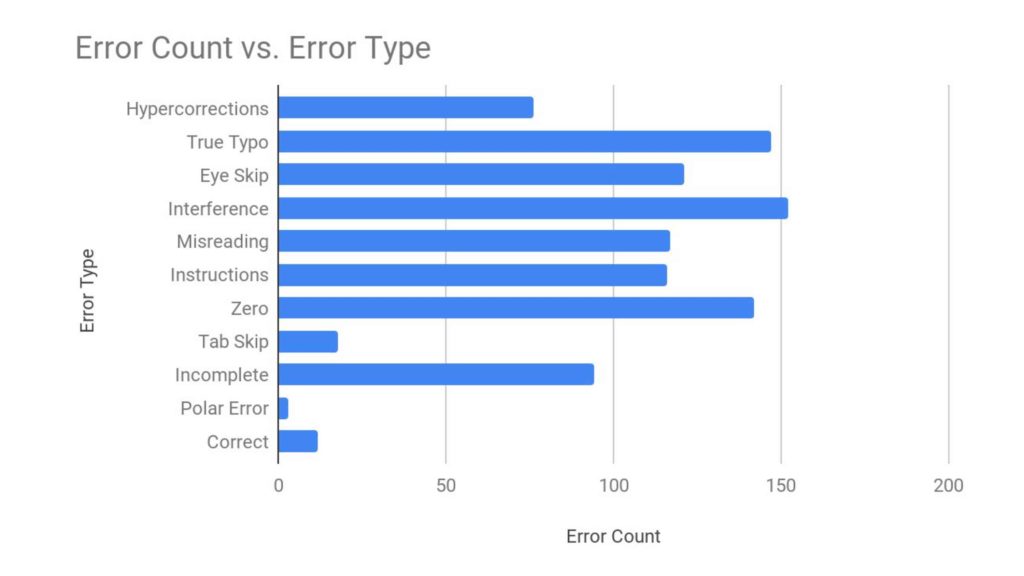

After classifying the errors, I plotted their frequency. I was really expecting to see a single error type--probably hypercorrection--represent the majority of the errors, so was astonished to see a fairly even distribution.

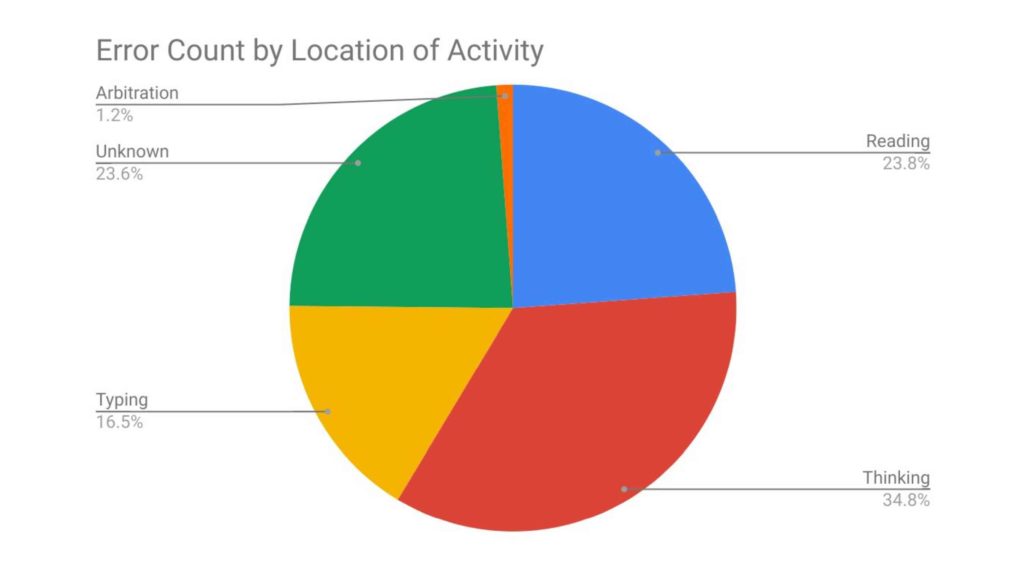

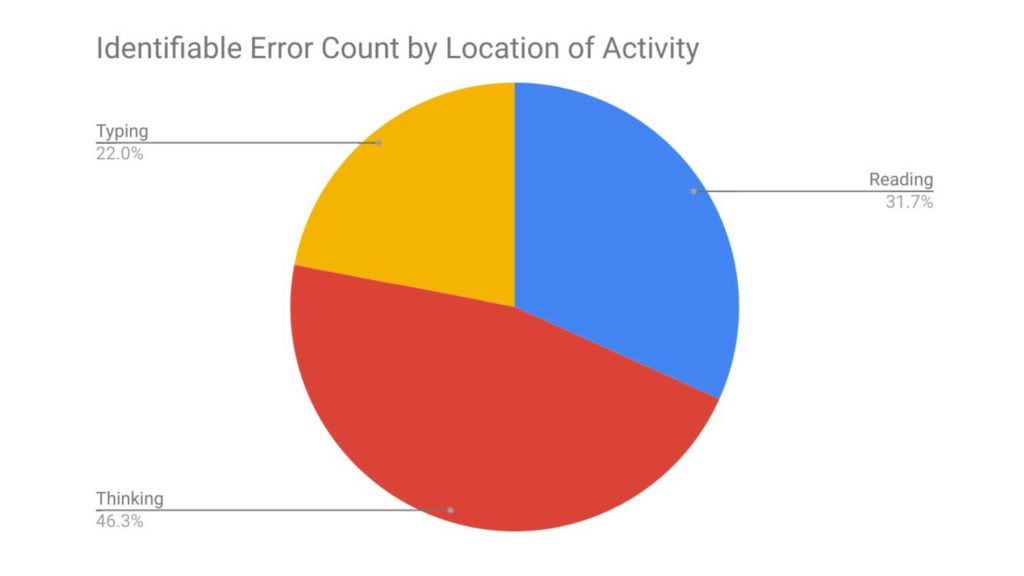

If we aggregate those by where they occur (Reading, Thinking, or Typing), then eliminate the unidentifiable error types like incomplete fields, we see these results.

As a technologist looking for technical solutions, this is pretty disappointing: even if we improve reading and typing, those account for only slightly more than half of the errors, leaving a substantial number that are basically introduced by the user's environment or their own brain.

What are the implications for those of us running crowdsourcing projects or using crowdsourced data?

One implication is that form layout matters.

One of the most common errors was eye skip (representing 121 of the 1000 errors I classified). Most of that was due to a mismatch between the layout of the death certificate and the layout of the web form. Users often missed the surviving spouse name, because it does not follow the father and mother name on the certificate, but is the next question on the form.

Changing the form to match the certificate would have certainly helped avoid this.

Another suggestion is better image highlighting.

In the spreadsheet transcription feature for FromThePage developed with CoSA, we created the ability to highlight the portion of the image that corresponds to the row a user is typing in. If this were done on a field-by-field basis for projects like the death certificate index, almost all eye skip problems could be eliminated.

Those fixes still can't address the mental errors, however.

John's been trying to convince me for years that blind double keying is necessary. This research has convinced me that at least some double-checking or double-keying is needed for projects that need a high level of accuracy and can afford the labor expense.

My partner Sara and I often tell project owners on FromThePage that data quality isn’t one-size-fits-all. The quality needs of a project providing full-text search of colonial court records are a lot lower than the needs of a project indexing vital records for living people; increased quality–whether through review or double keying–means increased labor.

However, perhaps we can differentiate quality within projects in addition to between them. The 1970 Death Certificate project on eVolunteer collected twenty-one fields. Is Month of Death as important for researchers as Decedent Surname is? Perhaps only the most important pieces of metadata could be double-keyed.

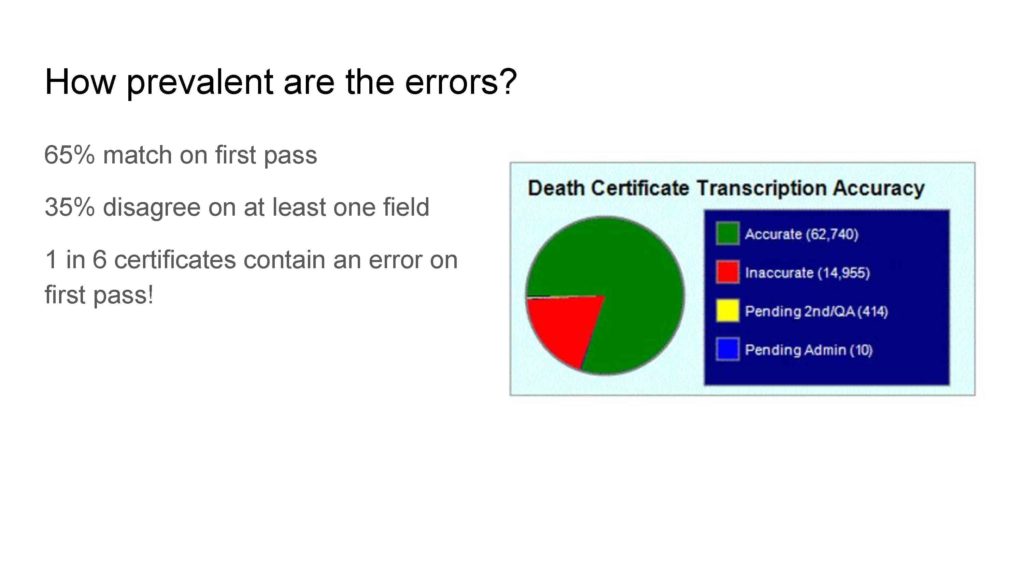

The eVolunteer portal sees about 65% of certificates pass the original two-person transcription, while the rest have errors and about 15% go to staff arbitration. If half of the initial 35% were correct, I project that an unreviewed single-keying system would have mistakes on one out of six cards. That's pretty significant!
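The arithmetic behind that projection can be written out as follows. This is one reading of the paragraph above, under the stated assumption that half of the transcripts involved in a disagreement were correct; the variable names are made up for the example.

```python
from fractions import Fraction

# About 65% of certificates pass the initial two-person comparison.
pass_rate = Fraction(65, 100)

# The remaining certificates had a disagreement between the two keyers.
disagree_rate = 1 - pass_rate

# Assumption from the text: half of those initial transcripts were correct,
# so the other half would be wrong if accepted without review.
assumed_wrong_share = Fraction(1, 2)

single_key_error_rate = disagree_rate * assumed_wrong_share

print(float(single_key_error_rate))      # 0.175
print(float(1 / single_key_error_rate))  # roughly one card in 5.7
```

A rate of 17.5% is roughly one card in six, which matches the projection.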

Another implication is to plan for errors in your data. If images of original documents are always delivered to researchers, at least those images can be consulted.

Using fuzzy searches in finding tools can also mitigate the presence of errors, since a fuzzy search on Annette might match Annettie.
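As a rough illustration of that idea, the sketch below uses `difflib.get_close_matches` from Python's standard library. The `fuzzy_lookup` helper, the 0.8 cutoff, and the sample names are assumptions chosen for the example; a production finding aid would more likely rely on its search engine's own fuzzy matching.

```python
import difflib


def fuzzy_lookup(query, indexed_names, cutoff=0.8):
    """Return indexed names whose spelling is close to the query."""
    # Compare case-insensitively, then map hits back to the stored spelling.
    lowered = {name.lower(): name for name in indexed_names}
    hits = difflib.get_close_matches(
        query.lower(), list(lowered), n=5, cutoff=cutoff
    )
    return [lowered[hit] for hit in hits]


names = ["Annettie", "Bland", "Lemmon", "Louis"]
print(fuzzy_lookup("Annette", names))  # ['Annettie']
```

Lowering the cutoff returns more candidates at the cost of more false matches, so the threshold is a judgment call for each collection.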